AI is slated to be at the heart of 21st century technological developments. Will Israel, which was among the world leaders in previous waves of technology, successfully find its way to lead with this technology as well?

The Advent of the Smart Machine Era

Artificial intelligence (AI) is slated to be at the heart of the 21st century’s technological developments. After several decades often referred to as the ‘AI winter,’ the present decade began with a long-awaited breakthrough in the field. The combination of powerful processors and a multitude of users with access to massive amounts of data has created a critical mass that has launched a new wave of technology. This new surge in the digital revolution builds on earlier waves: computability, connectivity, and mobility. Like those waves, AI is expected to become a general-purpose technology (GPT) that will serve as the foundation for many future advanced tech applications that will revolutionize every aspect of our lives: autonomous vehicles, personalized medicine, precision agriculture, mobile robots, computers that speak and understand natural language, and many other developments we cannot yet envision will all be based on AI capabilities.

Accordingly, AI-based innovation is expected to be key to economic growth for the companies, sectors, and countries at the forefront of this technology. It is not surprising, therefore, that many countries have already announced national AI strategies and are developing research infrastructure, human capital, and supportive regulatory frameworks. Will Israel, which was among the world leaders in previous waves of technology, successfully find its way to lead with this technology as well?

Technological waves in the digital revolution

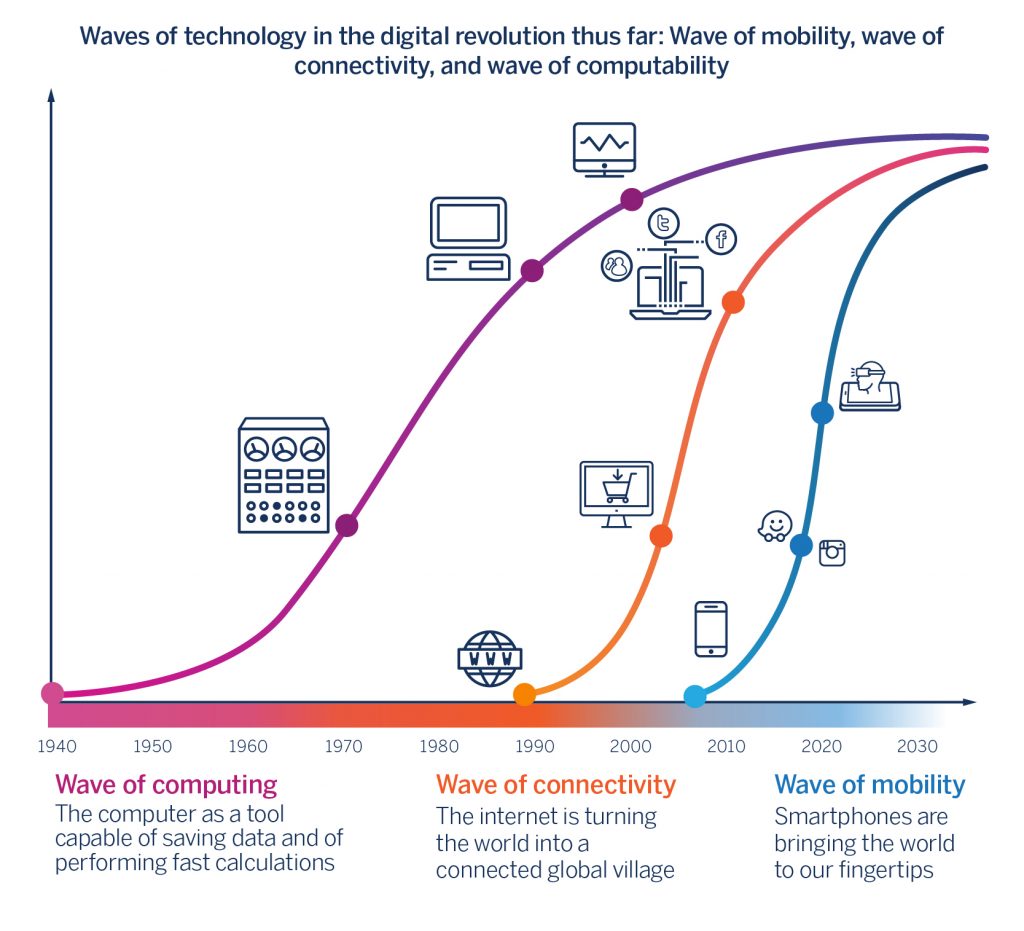

The digital revolution, which has gradually enabled nearly everything in the world to be represented as universally accessible digital data, is the most important revolution of the late 20th century, and most likely of the 21st century as well. But this transformation is not one-dimensional; it is built on successive waves of core enabling technologies. A careful analysis of these waves allows us to recognize when a wave of technology is waning, meaning its rate of innovation is decreasing, and when another wave is emerging and its rate of innovation is skyrocketing. Because economic growth in the relevant sectors is closely tied to these waves, such analysis carries considerable weight in innovation policy. In this chapter, we propose a classification of the digital revolution into three waves: the wave of computability, based on the computer; the wave of connectivity, based on the internet; and the wave of mobility, based on the smartphone. Of course, as with all models, the attempt to simplify reality by dividing it into distinct parts can be controversial; yet we believe that this classification helps explain the significance of the present era as a crossroads in Israel’s innovation policy.

The most important thread common to all of these waves is that they are GPTs: technological platforms that serve as a basis for a range of tech products and services. The wave of computability, for example, was the foundation for the development of supporting hardware and of a great deal of software that boosted work productivity worldwide, from the operating system, which itself serves as a platform enabling many other developments, to end-user software such as the word processor and the electronic spreadsheet. The wave of connectivity, which included browsers, search engines, and social media, helped users navigate the internet’s endless sea of data and connected people from all four corners of the earth. The wave of mobility, powered by key technologies such as GPS and cloud computing, paved the way for the apps designed for the mobile devices we take everywhere we go, and enabled the creation of the immense amount of data now at our disposal. Each wave was built on the foundations of the ones before it. As a result, most people on earth, especially in industrialized countries, are constantly on the move (mobility) with a computing device (computability) that connects them to most people around the world and gives them access to a great deal of human knowledge (connectivity).

Nonetheless, the rate of innovation in these waves is slowing down, and significant breakthroughs in these fields are likely to become less frequent. The growth of computing and connectivity capabilities is approaching a saturation point, mostly because end users’ appetite for greater capabilities at higher prices is diminishing. Innovation in mobility and its primary platform, the smartphone, has also been waning in recent years. Today, two-thirds of the world’s population owns a mobile phone. In countries such as the US, Britain, and Israel, the number of cellular subscriptions now exceeds the number of residents, at 122, 120, and 127 subscriptions per 100 residents, respectively. While there is still room for smart devices to spread in developing countries, this wave appears to be approaching saturation.

The combination of these waves has produced yet another consequence. Each of us who carries an internet-connected mobile device generates a large amount of data; collectively, we generate 2.5 quintillion bytes of data every day (2.5 × 10¹⁸), and this volume is growing rapidly. Combined with the exponential growth in computing power, the big data produced by these processes has revived a technology that had already been written off as irrelevant: machine learning. Machine learning is the basis for the next wave of the technological revolution: AI.

AI is taking center stage

The term AI was coined for a summer workshop held at Dartmouth College in 1956. It describes the capability of machines to simulate intelligent human behavior.[1] After years in which neural networks, a very rudimentary imitation of the human brain, were thought unable to advance AI, a breakthrough came. At the outset of this decade, researchers ran long-known algorithms on powerful processors that had not previously existed and discovered that combining this computing power with the large amounts of data that had now become accessible suddenly produced impressive results. For the first time, machine learning algorithms (especially those trained with a method called backpropagation) successfully completed ‘intelligent’ tasks such as identifying objects in an image, and they quickly outperformed all other methods. Enthusiasm grew with the wide coverage of accomplishments such as AlphaGo’s 2016 victory over Lee Sedol, one of the world’s top Go players. Go had long resisted computers because its tree of possible moves is far too large for even the most powerful machine to search exhaustively.

[1] Alan Turing, a pioneer of computability theory, was probably the first scientist to pose the question “Can machines think?”, in his classic 1950 paper “Computing Machinery and Intelligence.” However, he immediately argued that, under its most common interpretation, the question is meaningless. He then replaced it with another: can a computer behave in such a way that an observer would be unable to tell whether it is a human or a machine?

These developments marked the beginning of the AI revolution that is sweeping the world. No other technology seems as terrifying and as exciting to humankind. While earlier technological revolutions helped us overcome limitations in fields that require physical strength, speed, and long-distance communication, AI touches upon the fundamental quality that has allowed the human species to rule the planet: intelligence.

Accomplishments in this field in recent years have created the sense that the day when machines become smarter than us is closer than we may have once thought. At the same time, there have been extensive debates on whether or not this development is a positive one.

Those on the optimistic side of the debate view AI as a technology that will overcome the limitations of humankind and spur unprecedented prosperity. They believe that all the systems that sustain our lives, from agriculture and health to transportation, manufacturing, and commerce, will be run automatically by smart machines that meet all of our needs. Smart machines will learn about us and tailor the specific products or services we may need. All ‘tedious’ work will be performed by machines, leaving humans to complete the tasks that require creativity, empathy, and human contact.

Those on the pessimistic side of the debate, including prominent figures such as Elon Musk and Stephen Hawking, caution that AI could lead to the destruction of the human race. They argue that giving a network of machines, with its almost unlimited capacity for computing and storing data, the ability to learn will make computers far smarter than humans. It is easy to let our imagination run to horror plots, from countries using smart computers to take over the world to machines subjugating the entire ‘inferior’ human race. Less apocalyptic scenarios envision smart computers replacing us in a wide range of professions, leading to widespread unemployment. Pessimists also warn of the near-total eradication of our privacy: as more aspects of our lives become linked to smart machines, the huge companies or governments that control those machines will know all about our actions, or even our thoughts.

Alongside these imagined scenarios, whether optimistic or pessimistic, many others, led by AI researchers, believe that the excitement may be premature. They point to the huge challenges the field still faces, to its Sisyphean progress, and above all to widespread confusion about what AI can actually do. One such researcher is Rodney Brooks, one of the most experienced and prominent researchers in the field. In a 2017 article, he explains why most short-term predictions about AI miss the mark.[2] He cites the ability of a deep learning algorithm to identify children playing frisbee in a photo: by any measure an impressive feat, considering that the computer had ‘learned’ to identify these objects on its own. But if you were to ask the same software a few simple questions about the scene, such as “How do you throw a frisbee?”, “From what age can children play frisbee?”, or “Why do children play frisbee in the first place?”, you would not get an answer that could be considered intelligent. In other words, the software can label the objects in the image, but it has no ‘human’ understanding of the scene it depicts.

[2] Brooks, R. (2017, October 6). The Seven Deadly Sins of AI Predictions. MIT Technology Review.

What conclusion can be reached regarding the effect of AI on our lives? As is the case with other GPTs, the law that best describes future developments is Amara’s law, which states that we tend to overestimate the effect of a technology in the short run, and underestimate the effect in the long run. Public discourse is focused on the danger that AI will pose to many professions in the next few years, but is struggling to envision how, in a few decades, AI will completely transform our lives.

In the spirit of Amara’s law, we believe that the tech world is indeed at the outset of a new wave of innovation that will link several technological trends, such as big data, machine learning, and the Internet of Things (IoT), and will lead to the emergence of smarter machines. If the earlier waves of the digital revolution connected people to one another, the upcoming wave will connect machines to one another and give them greater decision-making capability and increasing autonomy.

These developments will create tremendous economic value, and the tip of the iceberg is already in sight. Any business process that is automated and becomes even slightly smarter is likely to bolster the firm’s profitability. For example, a better understanding of customers, based on identifying their purchasing patterns or those of similar consumers, makes it possible to sell better-suited products more efficiently. Image recognition algorithms applied to images from satellites or drones help predict agricultural output and identify crop diseases and pests; computer vision capabilities enable better analysis of medical tests that previously required a lab. These and other processes, which thousands of companies worldwide have already implemented, are expected to give these companies a leading competitive position. In 2016, this realization led IBM CEO Ginni Rometty to announce that within five years, AI would impact all business decisions.[3]

[3] Recode. (2016, June 8). Full video: IBM CEO Ginni Rometty at Code 2016.

In the throes of global competition

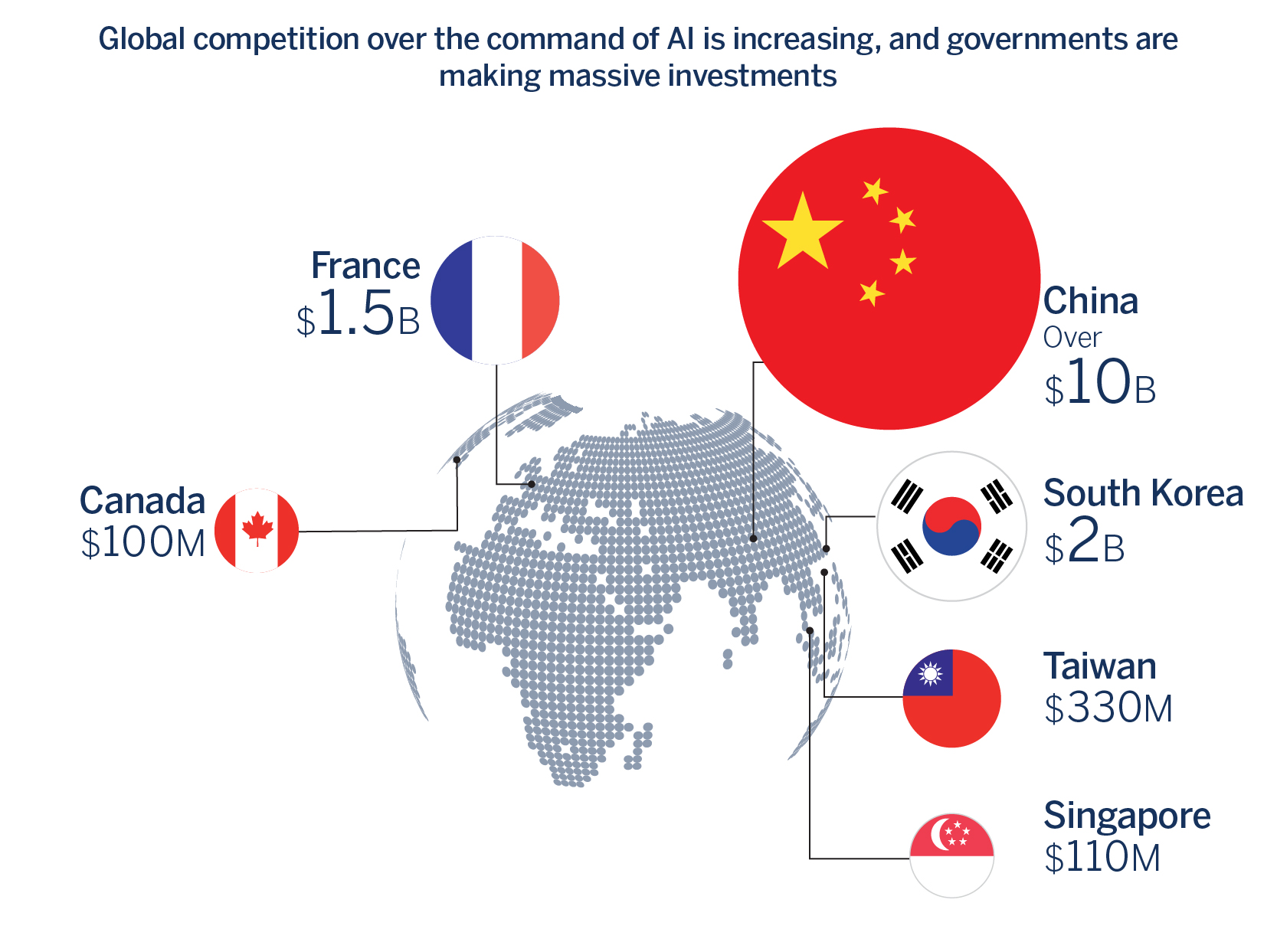

AI is likely to become the next enabling technology. If it does, the countries and companies that lead this wave of innovation will get the lion’s share of the ensuing profits, while those that lag behind will have to make do with the leftovers. In recent years, an increasing number of countries have developed a national AI strategy. As of late 2018, 17 countries had already announced such a strategy,[4] some of them investing billions of dollars. This is a clear indication that the race for technological dominance in the field is in full force.

[4] Dutton, T. (2018). An Overview of National AI Strategies.

But why are governments throwing their hats into the ring of technological competition, when this competition is ultimately driven by the business sector? For the most part, the early stages of technology waves coincide with heavy, long-term investments in the infrastructure that enables the development of core technologies. This infrastructure is too costly, and its uses too broad, for individual companies to fund (with the exception of the tech giants). As a result, any country that wants to take part in the race for global technological leadership hopes to be among the first to invest in it. As outlined in this chapter, huge investments in infrastructure were typical of earlier waves of technology.

Another reason for governments to be key players in the race for global AI dominance pertains to two main obstacles that are currently hindering development in the field: regulation and human capital.

Regulation, which is the government’s responsibility, is a fundamental condition for the advancement of the field. We are accustomed to humans making decisions about our lives, so the transition to accepting decisions made by algorithms, particularly in critical domains, calls for appropriate regulation. With AI, decisions are made by non-human entities; as a result, the concept of culpability, along with entire legal frameworks, is shifting. Crashes involving autonomous cars are a clear example. Autonomous vehicles are expected to reduce the incidence of traffic accidents by over 90%, yet we still find it difficult to tolerate fatal accidents caused by algorithms. Regulators must therefore address the question of culpability, and they face a difficult dilemma: to protect the public, should there be a stringent threshold that requires a retrospective account of the algorithm’s decision-making process, or would it be better to lower the bar of culpability in order to promote the adoption and development of AI-based innovation with all its benefits?

Another regulatory issue is privacy. Machine learning yields results when applied to massive amounts of relevant data. In most commercial applications that use these methods, we, the users, ‘manufacture’ this data. In the era of connectivity and mobility, this data can paint a clear picture of our actions, our interests, and even our thoughts. An important milestone on this issue came in May 2018, when the EU began enforcing the General Data Protection Regulation (GDPR). Accordingly, every government should establish regulatory restrictions on this kind of data collection.

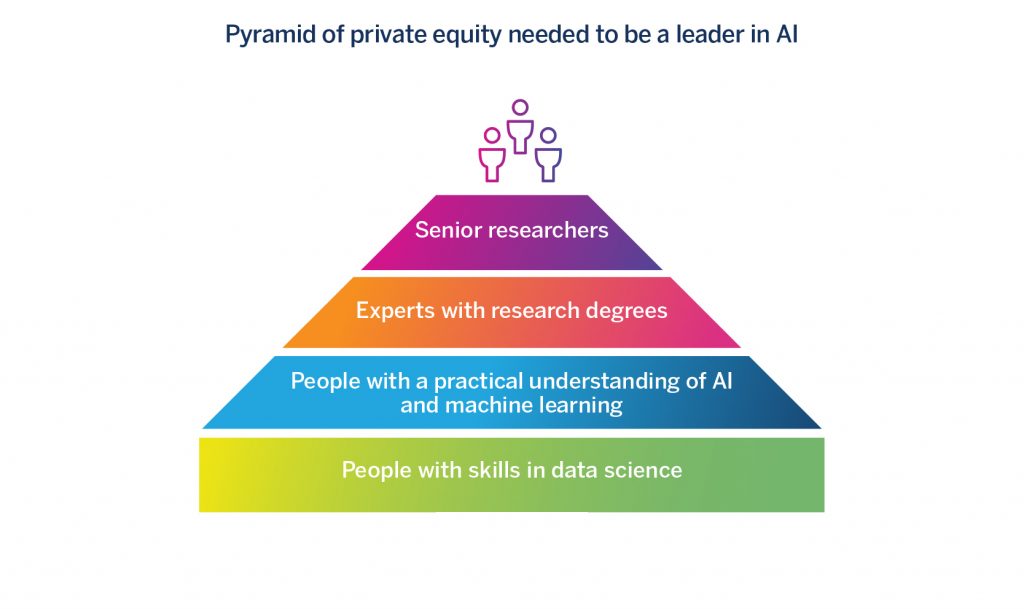

Another concern that governments must address is the workforce and the human capital needed to flourish in the age of smart machines. The workforce is expected to undergo a complete transformation: the increasing demand for AI is likely to render many traditional skills superfluous while creating high demand for new expertise. Expertise in STEM (Science, Technology, Engineering, and Mathematics) will become increasingly important, as will soft skills such as problem solving, creativity, and emotional intelligence. These skills will give a significant advantage to the people who possess them.[5] While it is difficult to predict future changes with a high degree of precision, there is no doubt that workers will need stronger technological literacy and will need to be prepared for frequent change.

[5] Deloitte. (2016). Talent for survival – Essential skills for humans working in the machine age.

Education systems in developed countries currently place an emphasis on STEM studies, especially on coding. In Europe, for example, 15 EU countries already include coding in their school curricula.[6] The idea behind this policy is that coding skills should be instilled from a young age in order to reinforce the core logical and algorithmic thinking that many future professions will require. Coding is already transforming from a skill set limited to tech professions into a language that will become increasingly pervasive throughout the workforce.

[6] Euractiv. (2015, October 16). Coding at school: How do EU countries compare?

An AI Strategy for Israel

Israel was a leader in the earlier technological waves of the digital revolution. Communications technologies and capabilities developed by Israel’s security apparatus, combined with academic excellence in computing, put Israel in a strong position to take advantage of internet developments. Many leading Israeli companies that emerged in the 90s, such as Check Point, Amdocs, NICE, and Mellanox, positioned Israel as a superpower in communications, security, data storage, and semiconductors. Another component of excellence was added to Israel’s ecosystem: an entrepreneurial culture. Thousands of startups established in Israel over the past two decades on internet and smartphone platforms have made Israel fertile ground for innovative companies that respond promptly to developments in tech markets.

Past successes might suggest that the Israeli innovation ecosystem is bound to lead the AI wave of innovation, even without special intervention by policymakers. We believe that such a passive stance risks Israeli technology losing its lead.

First, history shows that countries have often been technological leaders for relatively short periods of time. Japan is a clear example. A technological superpower that dominated the field of electronics in the 70s and 80s, Japan missed the wave of connectivity in the 90s and did not join the forefront of computing.

Second, it is important to remember that the Israeli ecosystem’s competitive edge was established, among other factors, thanks to sound policies that recognized and responded to technological developments and challenges in real time. Israel was one of the first countries to recognize the potential of encouraging innovation-based industry. The Office of the Chief Scientist, the entity that preceded the Innovation Authority, had already begun to support industrial R&D in the 70s, many years before the rest of the world discovered the potential of innovation-based growth. In the 90s, the government focused on advancing Israeli potential through groundbreaking programs such as Entrepreneurship, Technology Incubators, and the MAGNET program. For decades, the Office of the Chief Scientist was one of the only government bodies in the world tasked with supporting technological innovation. However, the global landscape of innovation policy has changed beyond recognition over the past two decades. Today, nearly all developed countries, as well as a leading group of developing countries, invest heavily in technological innovation.

Third, we must acknowledge that we are already falling behind in the race for AI-based technological dominance. The heavy investments in AI infrastructure planned by the governments cited in this chapter should be a warning sign for all of us. If we do not allocate appropriate resources and develop suitable tools to advance Israeli leadership in AI-based technologies, we risk lagging further behind. Accordingly, we call on all sectors – government, academia, and industry – to join forces in establishing a vision and a strategy on AI for the Israeli economy.

For readers familiar with Israel’s history of innovation policy, especially the policy of the Office of the Chief Scientist (the entity that preceded the Innovation Authority), this approach will look like a turning point. The Office of the Chief Scientist’s policy was technologically neutral: it invested in R&D projects based on project quality alone, without prioritizing particular technological fields. We should emphasize that this policy remains in place in the Innovation Authority’s tools aimed at bolstering technological innovations that are ‘close to the market,’ meaning developments driven by economic competitiveness. In contrast, the extensive investment in technological infrastructure and generic developments associated with new waves of technology such as AI is ‘far from the market.’ At this stage, market forces do not operate optimally, and targeted government intervention is necessary. It is important to establish an active strategy for developing targeted infrastructure that will allow the industry to develop AI-based products and services that meet market demand.

This strategy should respond to several key challenges. The first is to reinforce AI research infrastructure in academia and to turn Israeli research universities into centers of excellence in AI. Currently, the most advanced AI algorithms are developed in academia, and they serve as the basis for groundbreaking applications in industry.

The second challenge is nurturing all the human capital the field requires. At the top of the pyramid are senior researchers who specialize in algorithms. These researchers are needed both in academia, to advance research and train the next generation of professionals, and in industry, where tech giants offer them huge salaries. In the US, for example, this phenomenon is already posing a threat to research institutions. It is therefore important to increase the supply of these researchers and to offer them attractive incentives. Next in line are AI professionals with research degrees. Demand for these professionals, who possess a broad scientific foundation, is already high, and they serve as a channel for knowledge transfer between academia and industry. Beyond these professionals, there is high demand for workers who understand AI and machine learning to fill application development positions, and for workers trained in data science,[7] a phenomenon we described at length in the chapter titled High-Tech in Israel, 2018. Data science, which serves as a critical foundation for AI, is itself divided into several segments. While academia is a key training channel for the tech application positions at the base of the pyramid, additional emerging channels run by industry entities and non-academic institutions are expected to play a critical role in expanding the supply of human capital in the field.

[7] The Samuel Neaman Institute. (2018, August). Artificial Intelligence, Data Science and Smart Robotics.

The third challenge is developing R&D infrastructure that would serve both academia and industry, particularly computing power and data infrastructure. Two prominent data sources are the government and giant tech companies.[8] Consequently, it is important to ensure that government data is made accessible to researchers, entrepreneurs, and companies, without losing sight of privacy and transparency concerns. It is also important to create appropriate incentives to encourage open innovation among multinational tech companies that operate R&D centers in Israel and manage large private databases. Healthcare is one field in which the government has already begun creating advanced data infrastructure, as we will describe at length in the following chapter.

[8] Ibid.

Another important challenge is the penetration of AI technology into all branches of the economy. The vast economic value of AI lies not only in the potential it offers to the leading high-tech industries of the future but also, perhaps primarily, in the increased productivity and improved wellbeing it can bring to all aspects of life. As stated above, it is critical to encourage the adoption of the technology through appropriate regulation and through training human capital equipped to take on imminent workforce challenges.

Despite the substantial threat posed by growing global competition, we believe that Israel has an excellent chance of being a technological leader in the era of AI. Israel’s innovation system is mature and sophisticated: Israeli academia is at the forefront of global knowledge in computing, its security apparatus creates advanced technologies and skilled human capital, Israeli entrepreneurs stand out for their daring and innovation, and the Israeli high-tech industry is flourishing and evolving. While a small country like Israel cannot match the huge investments of China, Google, or Amazon, over the years Israeli companies have managed to become technological leaders in niche fields and to compete with resource-rich organizations. These are all important assets that will help Israel get on board with the next wave of technology, the wave of AI, if we succeed in paving the way for the industry.